Enrico's blog

Abstract

Debusine manages scheduling and distribution of Debian-related tasks (package build, lintian analysis, autopkgtest runs, etc.) to distributed worker machines. It is being developed by Freexian with the intention of giving people access to a range of pre-configured tools and workflows running on remote hardware.

Freexian obtained STF funding for a substantial set of Debusine milestones, so development is happening on a clear schedule. We can present where we are, where we're going to be, and what we hope to bring to Debian with this work.

Abstract

Although Debian has just turned 30, in my experience it has not yet fully turned adult: we sometimes squabble like boys in puberty, like children we assume that someone takes care of paying the bills and bringing out the trash, we procrastinate on our responsibilities and hope nobody notices.

At the same time, we cannot assume that people have the energy and motivation to do what is needed to keep the house clean and the boat afloat: Debian is based on people volunteering, and people have diverse and changing reasons to be with us, and private lives, loved ones and families, bills to be paid.

I want to start figuring out how to address practical issues around the sustainability of the Debian community, in a way that fits the needs and peculiarities of the Debian community.

The end does not justify the means: really, the means define what the end will be. I want to talk about the means: how to be sustainable, how to be interesting, how to be fun, how to have a community worth caring for, how to last for centuries.

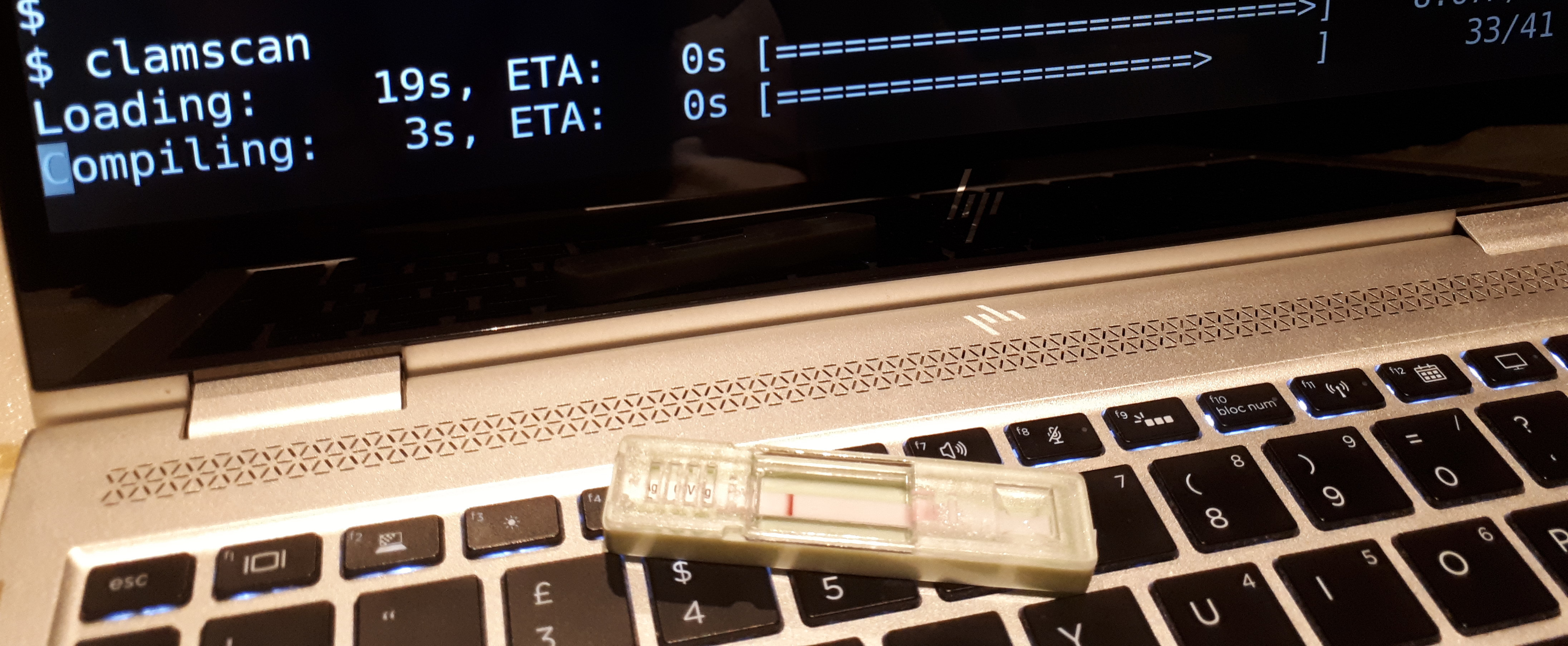

Debian: when you're more likely to get a virus than your laptop

Uhm, salsa is not resolving:

$ git fetch

ssh: Could not resolve hostname salsa.debian.org: Name or service not known

fatal: Could not read from remote repository.

$ ping salsa.debian.org

ping: salsa.debian.org: Name or service not known

But... it is?

$ host salsa.debian.org

salsa.debian.org has address 209.87.16.44

salsa.debian.org has IPv6 address 2607:f8f0:614:1::1274:44

salsa.debian.org mail is handled by 10 mailly.debian.org.

salsa.debian.org mail is handled by 10 mitropoulos.debian.org.

salsa.debian.org mail is handled by 10 muffat.debian.org.

It really is resolving correctly at each step:

$ cat /etc/resolv.conf

# This is /run/systemd/resolve/stub-resolv.conf managed by man:systemd-resolved(8).

# Do not edit.

# [...]

# Run "resolvectl status" to see details about the uplink DNS servers

# currently in use.

# [...]

nameserver 127.0.0.53

options edns0 trust-ad

search fritz.box

$ host salsa.debian.org 127.0.0.53

Using domain server:

Name: 127.0.0.53

Address: 127.0.0.53#53

Aliases:

salsa.debian.org has address 209.87.16.44

salsa.debian.org has IPv6 address 2607:f8f0:614:1::1274:44

salsa.debian.org mail is handled by 10 mailly.debian.org.

salsa.debian.org mail is handled by 10 muffat.debian.org.

salsa.debian.org mail is handled by 10 mitropoulos.debian.org.

# resolvectl status

Global

Protocols: +LLMNR +mDNS -DNSOverTLS DNSSEC=no/unsupported

resolv.conf mode: stub

Link 3 (wlp108s0)

Current Scopes: DNS LLMNR/IPv4 LLMNR/IPv6

Protocols: +DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Current DNS Server: 192.168.178.1

DNS Servers: 192.168.178.1 fd00::3e37:12ff:fe99:2301 2a01:b600:6fed:1:3e37:12ff:fe99:2301

DNS Domain: fritz.box

Link 4 (virbr0)

Current Scopes: none

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Link 9 (enxace2d39ce693)

Current Scopes: DNS LLMNR/IPv4 LLMNR/IPv6

Protocols: +DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Current DNS Server: 192.168.178.1

DNS Servers: 192.168.178.1 fd00::3e37:12ff:fe99:2301 2a01:b600:6fed:1:3e37:12ff:fe99:2301

DNS Domain: fritz.box

$ host salsa.debian.org 192.168.178.1

Using domain server:

Name: 192.168.178.1

Address: 192.168.178.1#53

Aliases:

salsa.debian.org has address 209.87.16.44

salsa.debian.org has IPv6 address 2607:f8f0:614:1::1274:44

salsa.debian.org mail is handled by 10 muffat.debian.org.

salsa.debian.org mail is handled by 10 mitropoulos.debian.org.

salsa.debian.org mail is handled by 10 mailly.debian.org.

$ host salsa.debian.org fd00::3e37:12ff:fe99:2301 2a01:b600:6fed:1:3e37:12ff:fe99:2301

Using domain server:

Name: fd00::3e37:12ff:fe99:2301

Address: fd00::3e37:12ff:fe99:2301#53

Aliases:

salsa.debian.org has address 209.87.16.44

salsa.debian.org has IPv6 address 2607:f8f0:614:1::1274:44

salsa.debian.org mail is handled by 10 muffat.debian.org.

salsa.debian.org mail is handled by 10 mitropoulos.debian.org.

salsa.debian.org mail is handled by 10 mailly.debian.org.

Could it be caching?

# systemctl restart systemd-resolved

$ dpkg -s nscd

dpkg-query: package 'nscd' is not installed and no information is available

$ git fetch

ssh: Could not resolve hostname salsa.debian.org: Name or service not known

fatal: Could not read from remote repository.

Could it be something in ssh's config?

$ grep salsa ~/.ssh/config

$ ssh git@salsa.debian.org

ssh: Could not resolve hostname salsa.debian.org: Name or service not known

Something weird with ssh's control sockets?

$ strace -fo /tmp/zz ssh git@salsa.debian.org

ssh: Could not resolve hostname salsa.debian.org: Name or service not known

enrico@ploma:~/lavori/legal/legal$ grep salsa /tmp/zz

393990 execve("/usr/bin/ssh", ["ssh", "git@salsa.debian.org"], 0x7ffffcfe42d8 /* 54 vars */) = 0

393990 connect(3, {sa_family=AF_UNIX, sun_path="/home/enrico/.ssh/sock/git@salsa.debian.org:22"}, 110) = -1 ENOENT (No such file or directory)

$ strace -fo /tmp/zz1 ssh -S none git@salsa.debian.org

ssh: Could not resolve hostname salsa.debian.org: Name or service not known

$ grep salsa /tmp/zz1

394069 execve("/usr/bin/ssh", ["ssh", "-S", "none", "git@salsa.debian.org"], 0x7ffd36cbfde8 /* 54 vars */) = 0

How is ssh trying to resolve salsa.debian.org?

393990 socket(AF_UNIX, SOCK_STREAM|SOCK_CLOEXEC|SOCK_NONBLOCK, 0) = 3

393990 connect(3, {sa_family=AF_UNIX, sun_path="/run/systemd/resolve/io.systemd.Resolve"}, 42) = 0

393990 sendto(3, "{\"method\":\"io.systemd.Resolve.Re"..., 99, MSG_DONTWAIT|MSG_NOSIGNAL, NULL, 0) = 99

393990 mmap(NULL, 135168, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7f4fc71ca000

393990 recvfrom(3, 0x7f4fc71ca010, 135152, MSG_DONTWAIT, NULL, NULL) = -1 EAGAIN (Resource temporarily unavailable)

393990 ppoll([{fd=3, events=POLLIN}], 1, {tv_sec=119, tv_nsec=999917000}, NULL, 8) = 1 ([{fd=3, revents=POLLIN}], left {tv_sec=119, tv_nsec=998915689})

393990 recvfrom(3, "{\"error\":\"io.systemd.System\",\"pa"..., 135152, MSG_DONTWAIT, NULL, NULL) = 56

393990 rt_sigprocmask(SIG_SETMASK, [], NULL, 8) = 0

393990 close(3) = 0

393990 munmap(0x7f4fc71ca000, 135168) = 0

393990 getpid() = 393990

393990 write(2, "ssh: Could not resolve hostname "..., 77) = 77

Something weird with resolved?

$ resolvectl query salsa.debian.org

salsa.debian.org: resolve call failed: Lookup failed due to system error: Invalid argument
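One difference worth keeping in mind at this point: host sends DNS queries straight to a server, while ssh calls the C library's getaddrinfo(), which the strace output above shows talking to systemd-resolved over /run/systemd/resolve/io.systemd.Resolve. Python's socket.getaddrinfo() goes through the same C library call, so a small sketch like the following should be able to reproduce the failure without involving ssh at all. The helper name resolve_like_ssh is made up for illustration.

```python
import socket


def resolve_like_ssh(hostname: str, port: int = 22) -> list[str]:
    """Resolve a name through the C library resolver, the way ssh does.

    Returns the sorted list of distinct addresses found, and lets
    socket.gaierror propagate when the lookup fails.
    """
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr), and
    # sockaddr[0] is the textual address for both IPv4 and IPv6
    return sorted({info[4][0] for info in infos})
```

Calling resolve_like_ssh("salsa.debian.org") inside a try/except for socket.gaierror would show whether the problem follows the getaddrinfo() path rather than DNS itself.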

Let's try disrupting what ssh is trying and failing:

# mv /run/systemd/resolve/io.systemd.Resolve /run/systemd/resolve/io.systemd.Resolve.backup

$ strace -o /tmp/zz2 ssh -S none -vv git@salsa.debian.org

OpenSSH_9.2p1 Debian-2, OpenSSL 3.0.9 30 May 2023

debug1: Reading configuration data /home/enrico/.ssh/config

debug1: /home/enrico/.ssh/config line 1: Applying options for *

debug1: /home/enrico/.ssh/config line 228: Applying options for *.debian.org

debug1: Reading configuration data /etc/ssh/ssh_config

debug1: /etc/ssh/ssh_config line 19: include /etc/ssh/ssh_config.d/*.conf matched no files

debug1: /etc/ssh/ssh_config line 21: Applying options for *

debug2: resolving "salsa.debian.org" port 22

ssh: Could not resolve hostname salsa.debian.org: Name or service not known

$ tail /tmp/zz2

394748 prctl(PR_CAPBSET_READ, 0x29 /* CAP_??? */) = -1 EINVAL (Invalid argument)

394748 munmap(0x7f27af5ef000, 164622) = 0

394748 rt_sigprocmask(SIG_BLOCK, [HUP USR1 USR2 PIPE ALRM CHLD TSTP URG VTALRM PROF WINCH IO], [], 8) = 0

394748 futex(0x7f27ae5feaec, FUTEX_WAKE_PRIVATE, 2147483647) = 0

394748 openat(AT_FDCWD, "/run/systemd/machines/salsa.debian.org", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory)

394748 rt_sigprocmask(SIG_SETMASK, [], NULL, 8) = 0

394748 getpid() = 394748

394748 write(2, "ssh: Could not resolve hostname "..., 77) = 77

394748 exit_group(255) = ?

394748 +++ exited with 255 +++

$ machinectl list

No machines.

# resolvectl flush-caches

$ resolvectl query salsa.debian.org

salsa.debian.org: resolve call failed: Lookup failed due to system error: Invalid argument

# resolvectl reset-statistics

$ resolvectl query salsa.debian.org

salsa.debian.org: resolve call failed: Lookup failed due to system error: Invalid argument

# resolvectl reset-server-features

$ resolvectl query salsa.debian.org

salsa.debian.org: resolve call failed: Lookup failed due to system error: Invalid argument

# resolvectl monitor

→ Q: salsa.debian.org IN A

→ Q: salsa.debian.org IN AAAA

← S: EINVAL

← A: debian.org IN NS sec2.rcode0.net

← A: debian.org IN NS sec1.rcode0.net

← A: debian.org IN NS nsp.dnsnode.net

← A: salsa.debian.org IN A 209.87.16.44

← A: debian.org IN NS dns4.easydns.info

I guess I won't be using salsa today, and I wish I understood why.

Update: as soon as I pushed this post to my blog (via ssh) salsa started resolving again.

Gtk4 has interesting ways of splitting models and views. One that I didn't find very well documented, especially for Python bindings, is a set of radio buttons backed by a common model.

The idea is to define an action that takes a string as a state. Each radio button is assigned a string matching one of the possible states, and when the state of the backend action is changed, the radio buttons are automatically updated.

All the examples below use a string as the value type, but anything that fits into a GLib.Variant can be used.

The model

This defines the action. Note that this enables all the usual declarative ways of triggering a state change:

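import gi
gi.require_version("Gtk", "4.0")
from gi.repository import Gio, GLib, Gtk

mode = Gio.SimpleAction.new_stateful(
    name="mode-selection",
    parameter_type=GLib.VariantType("s"),
    state=GLib.Variant.new_string(""))
# gtk_app is the application object: actions added to it get the "app." prefix
gtk_app.add_action(mode)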

The view

def add_radio(model: Gio.SimpleAction, id: str, label: str):
    button = Gtk.CheckButton(label=label)

    # Tell this button to activate when the model has the given value
    button.set_action_target_value(GLib.Variant.new_string(id))

    # Build the name under which the action is registered, plus the state
    # value controlled by this button: clicking the button will set this state
    detailed_name = Gio.Action.print_detailed_name(
        "app." + model.get_name(),
        GLib.Variant.new_string(id))
    button.set_detailed_action_name(detailed_name)

    # If the model has no current value set, this sets the first radio button
    # as selected
    if not model.get_state().get_string():
        model.set_state(GLib.Variant.new_string(id))

    # Hand the button back so that the caller can add it to a container
    return button

Accessing the model

To read the currently selected value:

current = model.get_state().get_string()

To set the currently selected value:

model.set_state(GLib.Variant.new_string(id))

I acquired some unusual input devices to experiment with, like a CNC control panel and a bluetooth pedal page turner.

These identify and behave like a keyboard, sending nice and simple keystrokes, and can be accessed with no drivers or other special software. However, their keystrokes appear together with keystrokes from normal keyboards, which is the expected default when plugging in a keyboard, but not what I want in this case.

I'd also like them to be readable via evdev and accessible by my own user.

Here's the udev rule I cooked up to handle this use case:

# Handle the CNC control panel
SUBSYSTEM=="input", ENV{ID_VENDOR}=="04d9", ENV{ID_MODEL}=="1203", \
    OWNER="enrico", ENV{ID_INPUT}=""

# Handle the Bluetooth page turner
SUBSYSTEM=="input", ENV{ID_BUS}=="bluetooth", ENV{LIBINPUT_DEVICE_GROUP}=="*/…mac…", ENV{ID_INPUT_KEYBOARD}=="1", \
    OWNER="enrico", ENV{ID_INPUT}="", SYMLINK+="input/by-id/bluetooth-…mac…-kbd"
SUBSYSTEM=="input", ENV{ID_BUS}=="bluetooth", ENV{LIBINPUT_DEVICE_GROUP}=="*/…mac…", ENV{ID_INPUT_TABLET}=="1", \
    OWNER="enrico", ENV{ID_INPUT}="", SYMLINK+="input/by-id/bluetooth-…mac…-tablet"

The bluetooth device didn't have standard rules to create /dev/input/by-id/ symlinks so I added them. In my own code, I watch /dev/input/by-id with inotify to handle when devices appear or disappear.
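A minimal sketch of the same idea, polling instead of using inotify (which Python's standard library does not wrap). The function names are made up for illustration, and a real inotify watch would react immediately instead of waiting for the next poll.

```python
import os
import time
from typing import Iterator


def list_devices(path: str) -> set[str]:
    """Return the names currently present in the directory."""
    try:
        return set(os.listdir(path))
    except FileNotFoundError:
        # The directory may not exist while no matching device is plugged in
        return set()


def diff_devices(before: set[str], after: set[str]) -> tuple[set[str], set[str]]:
    """Return (appeared, disappeared) between two listings."""
    return after - before, before - after


def watch(path: str, interval: float = 1.0) -> Iterator[tuple[str, str]]:
    """Yield ("added" | "removed", name) as entries come and go."""
    known = list_devices(path)
    while True:
        time.sleep(interval)
        current = list_devices(path)
        appeared, disappeared = diff_devices(known, current)
        for name in sorted(appeared):
            yield "added", name
        for name in sorted(disappeared):
            yield "removed", name
        known = current
```

Iterating over watch("/dev/input/by-id") would then report each symlink as it shows up or goes away.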

I used udevadm info /dev/input/event… to see what I could use to identify the device.

The Static device configuration via udev page of libinput's documentation describes the various elements specific to the input subsystem.

Grepping rule files in /usr/lib/udev/rules.d was useful to see syntax examples.

udevadm test /dev/input/event… was invaluable for syntax checking and testing my rule file while working on it.

Finally, this is an extract of some quick prototype Python code to read keys from the CNC control panel:

from typing import IO, Any, Iterator

import libevdev

# Map the key codes sent by the panel to the labels printed on its buttons
KEY_MAP = {
    libevdev.EV_KEY.KEY_GRAVE: "EMERGENCY",
    # InputEvent(EV_KEY, KEY_LEFTALT, 1)
    libevdev.EV_KEY.KEY_R: "CYCLE START",
    libevdev.EV_KEY.KEY_F5: "SPINDLE ON/OFF",
    # InputEvent(EV_KEY, KEY_RIGHTCTRL, 1)
    libevdev.EV_KEY.KEY_W: "REDO",
    # InputEvent(EV_KEY, KEY_LEFTALT, 1)
    libevdev.EV_KEY.KEY_N: "SINGLE STEP",
    # InputEvent(EV_KEY, KEY_LEFTCTRL, 1)
    libevdev.EV_KEY.KEY_O: "ORIGIN POINT",
    libevdev.EV_KEY.KEY_ESC: "STOP",
    libevdev.EV_KEY.KEY_KPPLUS: "SPEED UP",
    libevdev.EV_KEY.KEY_KPMINUS: "SLOW DOWN",
    libevdev.EV_KEY.KEY_F11: "F+",
    libevdev.EV_KEY.KEY_F10: "F-",
    libevdev.EV_KEY.KEY_RIGHTBRACE: "J+",
    libevdev.EV_KEY.KEY_LEFTBRACE: "J-",
    libevdev.EV_KEY.KEY_UP: "+Y",
    libevdev.EV_KEY.KEY_DOWN: "-Y",
    libevdev.EV_KEY.KEY_LEFT: "-X",
    libevdev.EV_KEY.KEY_RIGHT: "+X",
    libevdev.EV_KEY.KEY_KP7: "+A",
    libevdev.EV_KEY.KEY_Q: "-A",
    libevdev.EV_KEY.KEY_PAGEDOWN: "-Z",
    libevdev.EV_KEY.KEY_PAGEUP: "+Z",
}


class KeyReader:
    def __init__(self, path: str):
        self.path = path
        self.fd: IO[bytes] | None = None
        self.device: libevdev.Device | None = None

    def __enter__(self):
        self.fd = open(self.path, "rb")
        self.device = libevdev.Device(self.fd)
        return self

    def __exit__(self, exc_type, exc, tb):
        self.device = None
        self.fd.close()
        self.fd = None

    def events(self) -> Iterator[dict[str, Any]]:
        # Only report key events for keys that have a known label
        for e in self.device.events():
            if e.type == libevdev.EV_KEY:
                if (val := KEY_MAP.get(e.code)):
                    yield {
                        "name": val,
                        "value": e.value,
                        "sec": e.sec,
                        "usec": e.usec,
                    }

Edited: added rules to handle the Bluetooth page turner

- str.endswith() can take a tuple of possible endings instead of a single string
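A quick illustration of the tuple form, with a made-up helper name and an arbitrary set of suffixes:

```python
def is_archive(filename: str) -> bool:
    # A tuple of suffixes matches if the string ends with any one of them
    return filename.endswith((".tar.gz", ".tar.xz", ".zip"))
```

Note that it has to be a tuple: passing a list raises TypeError.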

About JACK and Debian

- There are 3 JACK implementations: jackd1, jackd2, pipewire-jack.

- jackd1 is mostly superseded in favour of jackd2, and as far as I understand, can be ignored

- pipewire-jack integrates well with pipewire and the rest of the Linux audio world

- jackd2 is the native JACK server. When started it handles the sound card directly, and will steal it from pipewire. Non-JACK audio applications will likely cease to see the sound card until JACK is stopped and wireplumber is restarted. Pipewire should be able to keep working as a JACK client but I haven't gone down that route yet

- pipewire-jack mostly works. At some point I experienced glitches in complex JACK apps like giada or ardour that went away after switching to jackd2. I have not investigated further into the glitches

- So: try things with pw-jack. If you see odd glitches, try without pw-jack to use the native jackd2. Keep in mind, if you do so, that you will lose standard pipewire until you stop jackd2 and restart wireplumber.

I have Python code for reading a heart rate monitor.

I have Python code to generate MIDI events.

Could I resist putting them together? Clearly not.

Here's Jack Of Hearts, a JACK MIDI drum loop generator that uses the heart rate for BPM, and an improvised way to compute heart rate increase/decrease to add variations in the drum pattern.

It's very simple minded and silly. To me it was a fun way of putting unrelated things together, and Python worked very well for it.

I had a go at trying to figure out how to generate arbitrary MIDI events and send them out over a JACK MIDI channel.

Setting up JACK and Pipewire

Pipewire has a JACK interface, which in theory means one could use JACK clients out of the box without extra setup.

In practice, one needs to tell JACK clients which set of libraries to use to communicate with servers, and Pipewire's JACK server is not the default choice.

To tell JACK clients to use Pipewire's server, you can either:

- on a client-by-client basis, wrap the commands with pw-jack

- to change the system default:

cp /usr/share/doc/pipewire/examples/ld.so.conf.d/pipewire-jack-*.conf /etc/ld.so.conf.d/ and run ldconfig (see the Debian wiki for details)

Programming with JACK

Python has a JACK client library that worked flawlessly for me so far.

Everything with JACK is designed around minimizing latency. Everything happens around a callback that gets called from a separate thread, and which gets a buffer to fill with events.

All the heavy processing needs to happen outside the callback, and the callback is only there to do the minimal amount of work needed to shovel the data your application produced into JACK channels.
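The division of labour can be sketched with nothing but the standard library. This is only an illustration of the pattern: on_process here is a plain function standing in for the JACK callback, and a real one would write into the port's buffer instead of returning a list. All names are made up.

```python
import queue

# Filled by the application thread, drained by the realtime callback
pending: "queue.SimpleQueue[bytes]" = queue.SimpleQueue()


def produce(messages: list[bytes]) -> None:
    """Application side: do the expensive work first, then enqueue the result."""
    for msg in messages:
        pending.put(msg)


def on_process(max_events: int) -> list[bytes]:
    """Callback side: take at most max_events ready messages, never wait."""
    out: list[bytes] = []
    while len(out) < max_events:
        try:
            out.append(pending.get_nowait())
        except queue.Empty:
            # Nothing else is ready: return right away instead of blocking
            break
    return out
```

Anything that does not fit in one call simply stays queued for the next one.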

Generating MIDI messages

The Mido library can be used to parse and create MIDI messages and it also worked flawlessly for me so far.

One needs to study a bit what kind of MIDI message one needs to generate (like "note on", "note off", "program change") and what arguments they get.

It also helps to read about the General MIDI standard which defines mappings between well-known instruments and channels and instrument numbers in MIDI messages.
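To get a feel for what such messages look like on the wire, here is a sketch that builds "note on" and "note off" as raw bytes without any library. The function names are made up, and in practice Mido takes care of this encoding.

```python
def _check(channel: int, note: int, velocity: int) -> None:
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0..15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be in 0..127")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a raw 3-byte MIDI "note on" message."""
    _check(channel, note, velocity)
    # Status byte: high nibble 0x9 means "note on", low nibble is the channel
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Build a raw 3-byte MIDI "note off" message."""
    _check(channel, note, velocity)
    # Status byte: high nibble 0x8 means "note off"
    return bytes([0x80 | channel, note, velocity])
```

Channels count from 0 here, so General MIDI's percussion channel 10 is channel 9.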

A timed message queue

To keep a queue of events that happen over time, I implemented a Delta List that indexes events by their future frame number.

I called the humble container for my audio experiments pyeep and here's my delta list implementation.
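For readers who have not met delta lists before, this is a minimal sketch of the general technique, not pyeep's implementation: each entry stores the delay since the previous entry, so moving time forward only ever touches the head of the list. Class and method names are invented for the example.

```python
from typing import Any


class DeltaList:
    """Events ordered by time, each storing the delay since the previous one."""

    def __init__(self) -> None:
        # Entries are [delta_frames, event]
        self.items: list[list[Any]] = []

    def add(self, frames: int, event: Any) -> None:
        """Schedule event to fire the given number of frames from now."""
        pos = 0
        while pos < len(self.items) and self.items[pos][0] <= frames:
            # Walk past earlier events, consuming their deltas
            frames -= self.items[pos][0]
            pos += 1
        if pos < len(self.items):
            # The next event becomes relative to the one being inserted
            self.items[pos][0] -= frames
        self.items.insert(pos, [frames, event])

    def advance(self, frames: int) -> list[tuple[int, Any]]:
        """Move time forward and return (offset, event) for events now due.

        offset counts frames from the start of this step.
        """
        due = []
        offset = 0
        remaining = frames
        while self.items and self.items[0][0] < remaining:
            delta, event = self.items.pop(0)
            offset += delta
            remaining -= delta
            due.append((offset, event))
        if self.items:
            # Only the head needs adjusting: the rest is relative to it
            self.items[0][0] -= remaining
        return due
```

Calling advance() once per audio buffer, with the buffer size in frames, returns the events that belong in that buffer together with their position inside it.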

A JACK player

The simple JACK MIDI player backend is also in pyeep.

It needs to protect the delta list with a mutex since we are working across thread boundaries, but it tries to do as little work under lock as possible, to minimize the risk of locking the realtime thread for too long.

The play method converts delays in seconds to frame counts, and the on_process callback moves events from the queue to the jack output.
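The arithmetic involved is small. As a sketch, with hypothetical function names and with sample rate and buffer size passed in as plain numbers (a real client would ask the JACK server for both):

```python
def seconds_to_frames(delay_sec: float, samplerate: int) -> int:
    """Convert a delay in seconds to a whole number of audio frames."""
    return round(delay_sec * samplerate)


def locate_in_blocks(frame: int, blocksize: int) -> tuple[int, int]:
    """Return (buffers to wait, offset inside that buffer) for a future frame."""
    return divmod(frame, blocksize)
```

For instance, at 48000 frames per second a delay of half a second is 24000 frames, which with 1024-frame buffers falls 448 frames into the buffer that comes 23 buffers from now.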

Here's an example script that plays a simple drum pattern:

#!/usr/bin/python3

# Example JACK midi event generator
#
# Play a drum pattern over JACK

import time

from pyeep.jackmidi import MidiPlayer

# See:
# https://soundprogramming.net/file-formats/general-midi-instrument-list/
# https://www.pgmusic.com/tutorial_gm.htm
# General MIDI percussion is on channel 10, which is 9 when counting from 0
DRUM_CHANNEL = 9

with MidiPlayer("pyeep drums") as player:
    beat: int = 0
    while True:
        # Bass drum (note 35) on every beat
        player.play("note_on", velocity=64, note=35, channel=DRUM_CHANNEL)
        player.play("note_off", note=35, channel=DRUM_CHANNEL, delay_sec=0.5)
        if beat == 0:
            # Snare (note 38) on the first beat of the bar
            player.play("note_on", velocity=100, note=38, channel=DRUM_CHANNEL)
            player.play("note_off", note=38, channel=DRUM_CHANNEL, delay_sec=0.3)
        if beat == 1:
            # Closed hi-hat (note 42) on the second beat of the bar
            player.play("note_on", velocity=100, note=42, channel=DRUM_CHANNEL)
            player.play("note_off", note=42, channel=DRUM_CHANNEL, delay_sec=0.3)

        beat = (beat + 1) % 4
        time.sleep(0.3)

Running the example

I ran the jack_drums script, and of course not much happened.

First I needed a MIDI synthesizer. I installed fluidsynth, and ran it on the command line with no arguments. It registered with JACK, ready to do its thing.

Then I connected things together. I used qjackctl, opened the graph view, and connected the MIDI output of "pyeep drums" to the "FLUID Synth input port".

fluidsynth's output was already automatically connected to the audio card and I started hearing the drums playing! 🥁️🎉️

I bought myself a cheap wearable Bluetooth LE heart rate monitor in order to play with it, and this is a simple Python script to monitor it and plot data.

Bluetooth LE

I was surprised that these things seem decently interoperable.

You can use hcitool to scan for devices:

hcitool lescan

You can then use gatttool to connect to devices and poke at them interactively from a command line.

Bluetooth LE from Python

There is a nice library called Bleak which is also packaged in Debian. It's modern Python with asyncio and works beautifully!

Heart rate monitors

Things I learnt:

- The UUID for the heart rate interface starts with 00002a37.

- The UUID for checking battery status starts with 00002a19.

- A longer list of UUIDs is here.

- The layout of heart rate data packets and some Python code to parse them

- What are RR values
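As a rough sketch of what parsing involves, the following decodes a Heart Rate Measurement notification following the field layout in the Bluetooth Heart Rate Service specification, and expands the short UUIDs above to their full form. The function names are made up, and this is not the code from the linked page.

```python
import struct

# 16-bit Bluetooth UUIDs are shorthand for a full UUID built on this base
BASE_UUID_SUFFIX = "-0000-1000-8000-00805f9b34fb"


def full_uuid(short: int) -> str:
    """Expand a 16-bit assigned number such as 0x2a37 to its 128-bit form."""
    return f"0000{short:04x}{BASE_UUID_SUFFIX}"


def parse_heart_rate(data: bytes) -> dict:
    """Decode a Heart Rate Measurement (0x2a37) notification payload."""
    flags = data[0]
    pos = 1
    if flags & 0x01:
        # Bit 0 set: heart rate is a little-endian 16-bit value
        (bpm,) = struct.unpack_from("<H", data, pos)
        pos += 2
    else:
        # Bit 0 clear: heart rate fits in a single byte
        bpm = data[pos]
        pos += 1
    energy = None
    if flags & 0x08:
        # Bit 3 set: a 16-bit "energy expended" field follows
        (energy,) = struct.unpack_from("<H", data, pos)
        pos += 2
    rr = []
    if flags & 0x10:
        # Bit 4 set: the rest is RR intervals, in units of 1/1024 second
        while pos + 2 <= len(data):
            (raw,) = struct.unpack_from("<H", data, pos)
            rr.append(raw / 1024)
            pos += 2
    return {"bpm": bpm, "energy": energy, "rr": rr}
```

With Bleak, a function like this would typically be called from the notification callback registered for full_uuid(0x2a37).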

How about a proper fitness tracker?

I found OpenTracks, also on F-Droid, which seems nice

Why script it from a desktop computer?

The question is: why not?

A fitness tracker on a phone is useful, but there are lots of silly things one can do from one's computer that one can't do from a phone. A heart rate monitor is, after all, one more input device, and there are never enough input devices!

There are so many extremely important use cases that seem entirely unexplored:

- Log your heart rate with your git commits!

- Add your heart rate as a header in your emails!

- Correlate heart rate information with your work activity tracker to find out what tasks stress you the most!

- Sync ping intervals with your own heartbeat, so you get faster replies when you're more anxious!

- Configure workrave to block your keyboard if you get too excited, to improve the quality of your mailing list contributions!

- You can monitor the monitor script of the heart rate monitor that monitors you! Forget buffalo, be your monitor monitor monitor monitor monitor monitor monitor monitor...